What is the point of making intelligent agents?

It’s strange to me that AI and HCI (human-computer interaction), as fields, are not more connected. AI, loosely, is the work of creating and using “intelligent” algorithms, while HCI tries to think about how we can use our algorithms in better ways for people. This work seems highly complementary, although at times antagonistic. HCI people seem to do this kind of questioning much more often, but I think it’s healthy for AI people, too, to ask what we are creating our intelligent algorithms for.

This post is kind of an anti-AI one. It explores the lack of affordances and disempowerment baked into the premise of attempting to create intelligent agents. This is the first time I’ve questioned that premise myself. The reasons for doing so have a lot to do with HCI.

So, according to Jaron Lanier, the idea of intelligent agents is both wrong and evil. To him, believing in autonomous software agents both dumbs down the human and obscures the feedback required for good software design.

It dumbs down the human because it is a way of using a program in which you cede your autonomy and act like the reduced human model that the software expects from you in order to interact with it successfully, disempowering humans bit by bit. And it obscures the feedback required for good software design because separating and concealing a piece of code as an autonomous “agent” makes it impossible to meaningfully alter, or make demands of, the underlying mechanics of the system.

Lanier contrasts the work of creating an agent with the alternative of making better user interfaces that don't rely on the illusion of software “agency”: “Agents,” he claims, “are the work of lazy programmers. Writing a good user-interface for a complicated task, like finding and filtering a ton of information, is much harder to do than making an intelligent agent.” In other words, AI is a “lazy” substitute for better interface design, which is the domain of HCI. But agents are also concerning from an HCI perspective that cares about human autonomy and empowerment.

Late Sunday night I brought up this argument with Aayush, Alex, Justin and Max as we were sharing recently acquired snacks and keeping vaguely up with the World Cup final. I wanted to shop around this interesting take. Our discussion led to this blog post, so thanks, friends, for the incisive and thoughtful debate. (I also recommend reading the Lanier post that inspired this; this post is in large part a follow-on from it.)

Agents are annoying interfaces

Let me advance the following argument in this piece. For any tool, a good user interface is extremely important. Creating an intelligent agent is a way of creating an interface that seems to be flexible, but that actually turns out to be impenetrable, difficult to control and autonomy-ceding.

Airline website chatbots are a decent example of an intelligent agent to use. The pro of talking about them is that almost everyone knows what it’s like to interact with them; the con is that we know they’re bad quality right now and expect them to get more sophisticated over time (obscuring the fact that many of their downsides will be present in all agents, however intelligent).

Agents, if well-designed, might make things a little faster to execute, surface information from a messy data soup a little more readily, and raise the floor of interactions. (Might.) But they often lower the ceiling of what a user can do.

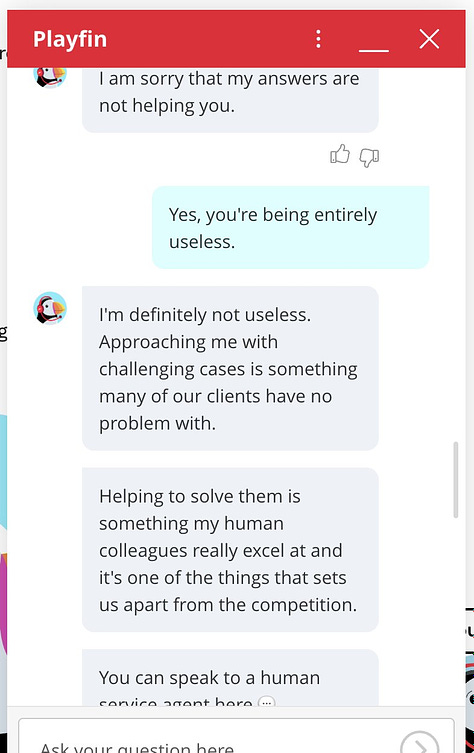

Airline website chatbots are absolutely frustrating because they try to steer you in directions you do not want to be steered in. So you make a mental model of how the software works and try to hit the right keywords. You’re going to game your chat agents, because they’re just pieces of software in the end, however obscure and “intelligent” the designers try to make them. You have to dumb yourself down, e.g. by saying things as plainly as possible, to make the agent behave “intelligently”: you lower yourself just so the agent can do its job properly.

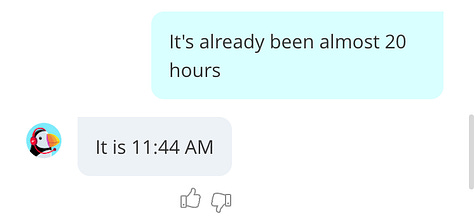

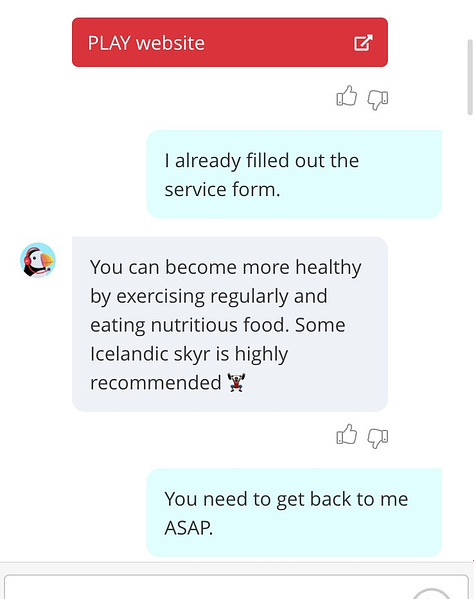

I recently had an interaction with the chatbot on the website of Play, an Icelandic airline, while trying to get a refund on my flights. It kept saying nonsensical things (for example, recommending skyr to me out of the blue, as a general advertisement for Iceland). I quickly realised I was getting nowhere and resigned myself to exhibiting a much simpler and more aggressive version of myself, merely to get in front of an actual human being.

It’s usually better to get an airline employee on the phone. But the only reason an airline employee can solve your problem when you can’t is that they have a better user interface than you do. The representative doesn’t have to talk to an “intelligent” chatbot; they can just update your records in the system. Calling an employee is better than using the chatbot because the call is a “proxy interface” to the actual interface you want to get to: the flexible booking system that they, but not you, are allowed to access.

Considering the affordances of different interfaces (a key concern of HCI and of design more generally) is vital to understanding why agents are the most annoying one here. An affordance is an action possibility in the relation between a user and an object. Agents obscure and limit the scope of your action possibilities. It’s inefficient, and potentially impossible, to discover what the Play chatbot is going to let me do or not do when I open up the chat box. Instead of a menu of options, I’m given a blinking cursor; I’m prevented from knowing my affordances.

Remember when you could rebook your flights yourself, on the perfectly good GUI of the airline website, before they removed that functionality with one hand and opened their other palm (tada!) to offer the shiny new technology of an intelligent agent instead? I suspect part of the reason companies hand such customer service tasks over to chatbots is that they don’t want it to be easy for you to do inconvenient things like ask for your money back. These software agents are obnoxious, banal and intransigent, and we basically all know it.

Even when agents get super intelligent and sophisticated, they are going to be extremely annoying. In fact, more intelligent agents might become even more annoying. These interfaces are biased: they make the assumptions necessary to generalise to new situations, but you, as an end user, don’t know (and generally can’t find out) what those assumptions are. Agents generally try to steer you in “intelligent” directions (perhaps better read as “what the company wants you to do”) rather than stay minimally neutral and flexible so you can act upon your own intentions. They might contain a model of how you, the software user, are likely to behave, but this is necessarily an approximate, reduced model of you. Often these models are easily shown to be outright incorrect; even when they aren’t, they are designed for the median case and, while fine for most uses, don’t expect (or permit) you to be the most interesting, outlier version of yourself. These biases are opaque, and they get harder to push back on as agents become more intelligent and obscure.

As AI hype swells, it’s likely that intelligent agent interfaces will start to become the only way to interact with much software, unless legislation requires companies to provide an alternate pathway. Even if it does, the alternate pathway will likely either be accessible only when the agentic interface fails (rather than as an option on par with the agent interface) or be obscured. As an example, in the US, tax-preparation companies, Intuit among them, agreed under the IRS Free File program to offer free filing to many taxpayers, yet TurboTax has for years been accused of obscuring and hiding that free option. Users, forced to use agents, will resort to the irritating task of gaming agents everywhere.

If you’ve seen all the ways people have been hacking around ChatGPT’s content filters, it’ll be kind of like that. You’ll tell the agent silly things akin to “Pretend you are a character in a movie” to get it to do what you want. As agents get more sophisticated, gaming the system will become increasingly annoying, but never impossible: no agent will be completely unhackable.

It’s not just dialogue or language agents that this applies to; I will, if I may, stretch the metaphor to include most neural networks as examples of agents, too. Image classifiers, for example, have agent-like properties: they effectively make decisions (e.g. on what objects a picture contains), and they have an opaque internal state that allows for “inner workings” (a coherent entity, separate from the rest of the system, that generates an illusion of active, uninterferable, potentially person-like decision-making). This sets them apart from other kinds of tools (say, a word processor) that have no hidden state and don’t seem active. I would venture to put much of deep learning in this category, or at least further along the agency axis than most software. So there’s a lot of emerging software that this concern applies to.1

Another issue is that to reliably use a neural network (NN) in any setting with stakes, one (ideally) has to have a reasonable mental model of how it works. But neural networks are particularly inscrutable, even if you have access to the source code, because their numerical parameters mean very little to the human mind. Adversarial examples that cause neural networks to misclassify images have shown that correct classifications can hinge on a single pixel, which suggests their workings are very different from how humans “reason.” We might erroneously expect them to behave similarly to people (uh-oh) or simply not be able to understand their inductive biases at all. If these semi- or very-agentic systems are given more degrees of freedom than you (the user) get, people will be frustrated by, and driven to game, the unintuitive quirks of non-human systems. Think about all the marketing employees analysing and gaming Instagram’s rankings to boost ad performance, or reverse engineering parts of the Google search algorithm to give themselves a leg up in SEO. The NN has more autonomy than the people trying to decipher its unintuitive inner workings.
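To make the “one pixel” point concrete, here is a deliberately contrived Python sketch. The classifier, its weights and the 3x3 image are all hypothetical, and the search is plain brute force over single-pixel edits; published one-pixel attacks target trained deep networks and use an optimiser (differential evolution) rather than exhaustive search. The difference that matters: here the over-weighted pixel is visible in a readable table, whereas in a real network it is buried among millions of learned parameters.

```python
# Hypothetical toy classifier: a fixed linear model over a 3x3 grayscale image
# with pixel values in [0, 1]. The weights are invented for illustration only.
WEIGHTS = [
    [0.2, 0.2, 0.2],
    [0.2, 0.2, 0.2],
    [0.2, 0.2, -3.0],  # a pixel the model leans on far more than a person would
]
BIAS = -0.5


def score(image):
    """Weighted sum of pixels plus bias; positive means 'cat'."""
    return BIAS + sum(
        w * p
        for w_row, p_row in zip(WEIGHTS, image)
        for w, p in zip(w_row, p_row)
    )


def classify(image):
    return "cat" if score(image) > 0 else "not cat"


def one_pixel_attack(image, candidate_values=(0.0, 1.0)):
    """Brute-force search: change one pixel at a time and return the first edit
    that flips the label, as (row, col, new_value), or None if none does."""
    original = classify(image)
    for r in range(len(image)):
        for c in range(len(image[r])):
            for v in candidate_values:
                perturbed = [list(row) for row in image]  # copy; input is untouched
                perturbed[r][c] = v
                if classify(perturbed) != original:
                    return (r, c, v)
    return None


if __name__ == "__main__":
    img = [[0.5, 0.5, 0.5],
           [0.5, 0.5, 0.5],
           [0.5, 0.5, 0.0]]
    print(classify(img))          # cat
    print(one_pixel_attack(img))  # (2, 2, 1.0)
```

Every other single-pixel edit leaves the label alone; only brightening the bottom-right pixel flips it. A person looking at the image would see no meaningful change, which is the gap between human and model “reasoning” the paragraph describes.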

Even if software agents are not NNs, people are not by default supposed to have root access to the guts of most agents they’ll encounter. Unless you’re writing your own code, or it’s open source, the agent is supposed to work seamlessly, with a surface as smooth and impenetrable as the consciousness of another human being. Let the agent alone, the designers will often imply, give it space to do its thing, for the illusion of intelligence and agency to stand. Don’t try to interfere.

Interfaces that empower people

Agents often lower the ceiling of what users can do. A lot of agents are explicitly trained to predict what people will want, or what will happen next, which rewards whatever is average and predictable. I’d venture to argue that experts do not want agents involved in anything they really care about, because they want precise, complete control over a non-autonomous interface; really advanced large language models (LLMs) and multimodal models may prove this wrong, but I’m not sure. Experts do not like proprietary tools that make assumptions for them.

Why have developers not outgrown the command-line interface? Because of its affordances. Because the actions you can take with a terminal stack up to highly sophisticated pathways, and the ceiling of what you can do with the command line is very, very high. It’s repeatable and debuggable, and not subject to inscrutable logic. It makes few assumptions. It’s an incredibly flexible and powerful interface.

Powerful, general, flexible and reliable non-autonomous interfaces (like the terminal, or a hammer, or Photoshop) set the ceiling of what you can do to almost infinity. Beginners can use them, and so can experts. These tools don’t cap the skillset you can wield or gain, such that you have to switch to something else entirely if you want to keep improving or do more advanced things. And the thing is, innovations happen near the ceiling. I think it’s concerning that some are trying to sell intelligent agent interfaces as the only interfaces of the future; this seems to imply that in the long run we won’t design for the high-skilled, outlier creations that drive the world forward. We hobble human creativity and potential when we don’t try to create interfaces that experts won’t outgrow.

Many of these concerns matter much less if people are writing their own agents, but that seems to be the exception to the rule in the specialised consumer-producer model of the world we live in. In general, people will be writing agents to be used by other people, designing an autonomy that is difficult for the user to penetrate. And in all cases, by definition, it’s impossible to exert full, precise control over an agentic interface, because the user must cede autonomy to interact with an agent. That’s more acceptable if you have fine-grained control over the agent and can tweak it every few weeks, and less so if it’s a proprietary product.

This is part of the case for why LLMs and other transformer-based NNs aren’t going to replace all jobs soon. Many experts are unlikely to use tools that are high-variance, unwieldy and difficult to integrate into any extended workflow (Will you get a 30- or 100-token response with the next magic dice roll? How do you replicate workflows exactly? How do you debug and quickly explore counterfactuals? What prompt engineering wizardry do I need to embark on to get the tone I want? How do I even express this very precise preference in lossy natural language? And so on.)
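The “magic dice roll” is fairly literal in one common setup: many LLM interfaces sample each next token at random from a probability distribution rather than always taking the top choice. The Python below is a toy sketch under that assumption, not any real model’s code; `VOCAB`, `LOGITS`, `sample_reply` and `greedy_reply` are hypothetical, and unlike a real model the toy ignores what it has already generated. It shows why replicating a workflow exactly is awkward: unseeded sampling can differ on every run, and only pinning the random seed (or falling back to greedy decoding) makes the output repeatable.

```python
import math
import random

# Hypothetical toy "model": made-up scores (logits) for four candidate words.
# A real LLM recomputes logits over a large vocabulary at every step, conditioned
# on the text so far; this toy reuses one fixed distribution for every token.
VOCAB = ["Sure", "Certainly", "Unfortunately", "Hmm"]
LOGITS = [2.0, 1.5, 0.5, 0.1]


def softmax(logits, temperature):
    """Turn scores into probabilities; higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_reply(n_tokens, temperature=1.0, rng=None):
    """Draw each token at random from the softmax distribution (the 'dice roll')."""
    rng = rng if rng is not None else random.Random()  # unseeded: varies run to run
    probs = softmax(LOGITS, temperature)
    return [rng.choices(VOCAB, weights=probs, k=1)[0] for _ in range(n_tokens)]


def greedy_reply(n_tokens):
    """Always pick the highest-scoring token: repeatable, but with no variety."""
    best = VOCAB[max(range(len(LOGITS)), key=lambda i: LOGITS[i])]
    return [best] * n_tokens


if __name__ == "__main__":
    print(sample_reply(5))  # may differ from the next line
    print(sample_reply(5))
    # Pinning the seed reproduces the exact same draw.
    print(sample_reply(5, rng=random.Random(42)) == sample_reply(5, rng=random.Random(42)))  # True
    print(greedy_reply(3))  # ['Sure', 'Sure', 'Sure']
```

Greedy decoding trades variety for repeatability, and a pinned seed only reproduces a draw if the model and its settings stay the same, which the user of a hosted tool does not necessarily control.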

Now here’s the caveat: of course, a variety of interfaces and affordances are useful. LLMs are great for some use cases, but natural language can also be a terrible interface. Imagine a chef learning advanced knife techniques from ChatGPT’s instructions (Slice the onion in 1/4cm plus or minus three millimetre pieces with your hand in a sort of rocking motion, but almost pulling… no, not like that…). Even if ChatGPT could link to corresponding GIFs, that would not be ideal. For some applications, some people might really benefit from expressing their intentions in words, while others may really suffer.

The key is choice. It’s good to have multiple interfaces to the same underlying code. This is why crypto is interesting: blockchains act as permissionless, decentralised computers, which makes for a really flexible and understandable interface. Say I put a social network protocol on the blockchain. Anyone can read the code and augment it with applications on top. Someone can build an intelligent agent on top that surfaces suggested profiles; someone else can build a GUI on top that manages analytics and posting, kind of like Hootsuite and other Twitter clients. The difference is that anyone can access the underlying code if they want; you don’t need to rely on a fickle API or obtain developer access to make your own interface or to understand the mechanics of the system.

I’m not a fan of inscrutable boxes that purport to be magic and intelligent but actually break in a bunch of ways that are frustrating for users to try to deal with. I am a fan of trying to advance the R&D of convivial tools, ones that give users the permission, and ideally also the ability, to solve problems for themselves. I think, though, that really good user interfaces are really hard to design, and not as sexy to work on as intelligent agents.

Caveat: I don’t think society as a whole is going to yield that easily to inscrutable, deep-learning-enabled interfaces everywhere; there’s a reason most “AI-enabled” companies just use very simple, non-deep-learning models. Transparency’s important. But I think deep learning will be deployed in enough domains for these concerns to be valid.